Do we know what we should let AI control on our behalf?

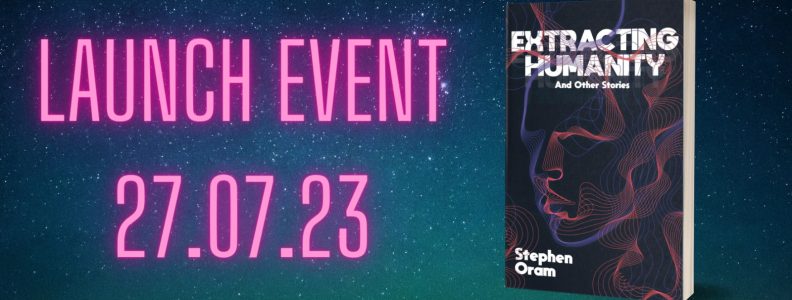

Press release – Extracting Humanity

Extracting Humanity in local paper Fitzrovia News