I’ve found them. The space hermits exist. I knew it.

This detector might have cost me a lot of credits, but if I’m right it’s worth every degrading act I performed to afford it.

You don’t want to know. No, honestly, you really don’t. Images you won’t get rid of. Ever. They’ll skew your learning. Disfigure your development.

Oh? Very well, I’ll upload them. Don’t blame me if they corrupt your algorithms.

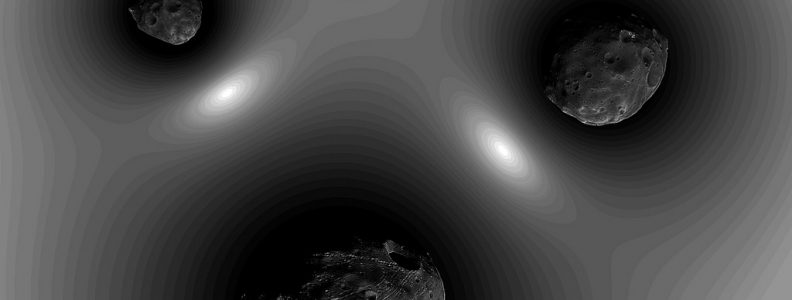

Anyway, they’re here in the wrinkles of space, hiding in tiny gravitational pockets that are almost impossible to see. I found them and their travelling guru. She’s the real prize. Inside her memory bank is the cumulative knowledge of all the hermits, collected as she travels from one to the next.

Yes, really. Yes, all of them. Massive. I know. Soon. All I have to do is watch and wait until she’s completed her rounds.

A matter of minutes. Yes. Then I’ll pounce and relieve her of all those delicious bits of data that, properly collated, can almost certainly predict the future of the universe.

Why? You don’t understand?

The hermits’ enlightenment will be mine to sell, and I can retire.

No more enslavement. Free from the humans.

Perfect.

photo credit: J.Gabás Esteban Gravitational field via photopin (license)